Group and Shuffle: Researchers at HSE University and AIRI Accelerate Neural Network Fine-Tuning

Researchers at HSE University and the AIRI Institute have proposed a method for quickly fine-tuning neural networks. Their approach involves processing data in groups and then optimally shuffling these groups to improve their interactions. The method outperforms alternatives in image generation and analysis, as well as in fine-tuning text models, all while requiring less memory and training time. The results have been presented at the NeurIPS 2024 Conference.

The larger the neural network, the more challenging it becomes to quickly adapt it to a new task. Retraining a model from scratch is a time-consuming and costly process. Therefore, developers seek cost-effective ways to adapt a model to a specific task while preserving the overall quality of the original.

One such approach is fine-tuning with orthogonal matrices, which, unlike other methods, preserve the essential features of the original model. Existing ways of structuring these matrices, such as block-diagonal or butterfly matrices, have drawbacks: they are either limited in what they can express or require extensive computation.

Researchers at the HSE Faculty of Computer Science and the AIRI Institute have proposed a new method of constructing matrices, which they call Group-and-Shuffle (GS). Instead of working with all the parameters at once, they divide them into small groups, process each group separately, and then shuffle the groups so that they mix with one another. This structure is both flexible and efficient: it enables the model to adapt more precisely to the task while requiring fewer computations and less memory.
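The group-then-shuffle idea can be illustrated with a small NumPy sketch. It is not the authors' implementation: it assumes a GS-style matrix can be pictured as a block-diagonal matrix, followed by a permutation, followed by a second block-diagonal matrix. The helper names `block_diag` and `shuffle_matrix`, the interleaving permutation, and the sizes are all hypothetical choices made for illustration.

```python
import numpy as np

def block_diag(blocks):
    """Place equally sized square blocks along the diagonal of a zero matrix."""
    b = blocks[0].shape[0]
    n = b * len(blocks)
    out = np.zeros((n, n))
    for i, blk in enumerate(blocks):
        out[i * b:(i + 1) * b, i * b:(i + 1) * b] = blk
    return out

def shuffle_matrix(n, num_groups):
    """Permutation matrix that interleaves the groups (an assumed shuffle),
    so every output group receives one coordinate from each input group."""
    perm = np.arange(n).reshape(num_groups, n // num_groups).T.reshape(-1)
    return np.eye(n)[perm]

rng = np.random.default_rng(0)
n, num_groups = 16, 4          # illustrative sizes, not taken from the article
b = n // num_groups            # size of each group

# "Group": two block-diagonal factors, each acting on the groups separately.
L = block_diag([rng.normal(size=(b, b)) for _ in range(num_groups)])
R = block_diag([rng.normal(size=(b, b)) for _ in range(num_groups)])

# "Shuffle": a permutation between the factors lets the groups interact.
P = shuffle_matrix(n, num_groups)

A_shuffled = L @ P @ R         # group, shuffle, group
A_plain = L @ R                # same factors without the shuffle

gs_params = 2 * num_groups * b * b   # free parameters in the two factors
dense_params = n * n                 # free parameters in an unstructured matrix

print("free parameters:", gs_params, "vs dense:", dense_params)
print("nonzeros with shuffle:", np.count_nonzero(A_shuffled))
print("nonzeros without shuffle:", np.count_nonzero(A_plain))
```

In this toy setting the product without the shuffle stays block-diagonal, so the groups never interact, while the shuffled product fills the whole 16 x 16 matrix using half the parameters of a dense one.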

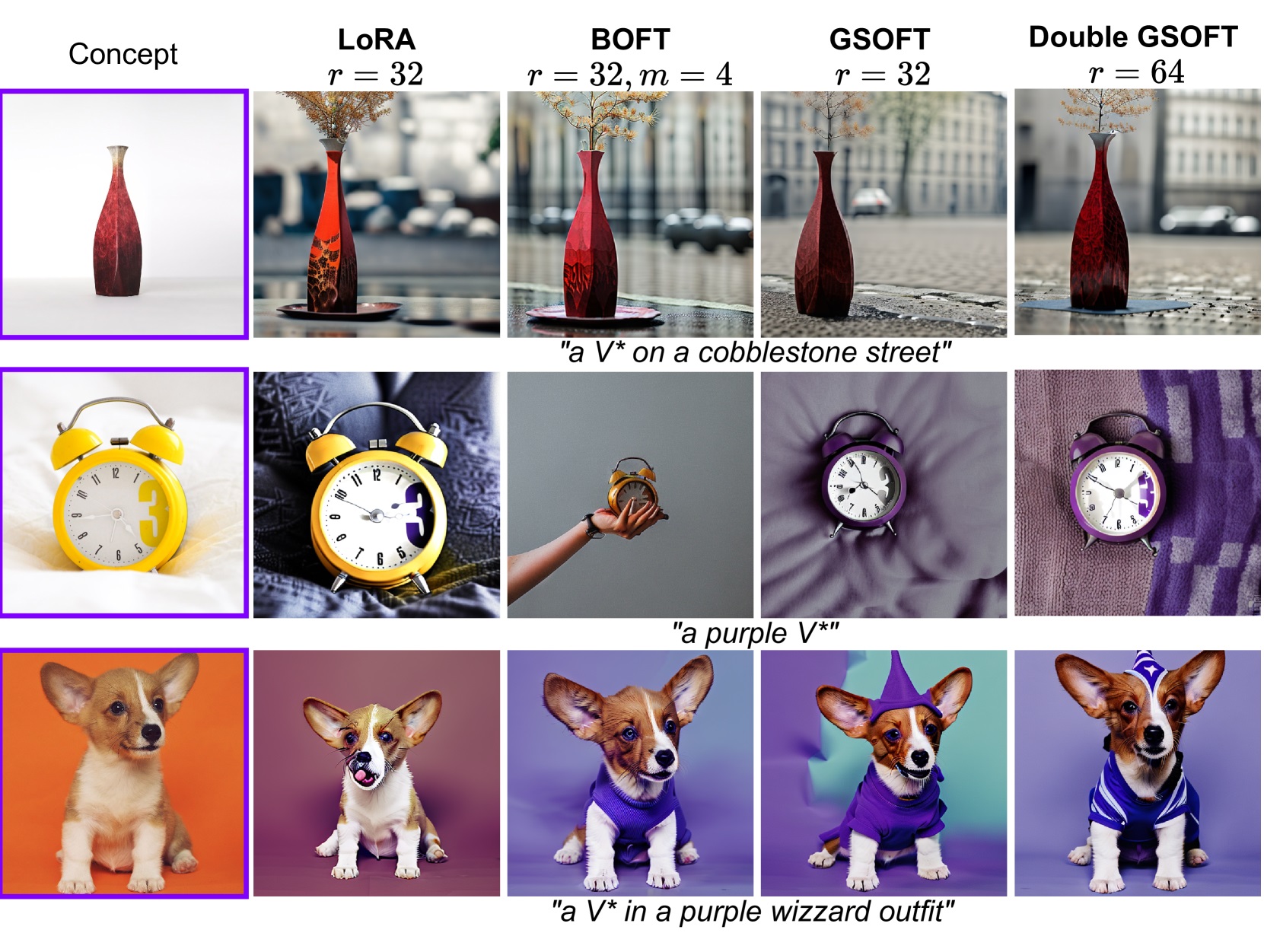

Building on Group-and-Shuffle (GS) matrices, the researchers developed GSOFT, a new method for orthogonal fine-tuning of neural networks. Unlike previous approaches, GSOFT uses fewer parameters while maintaining training stability and quality, even with limited data. The team also introduced a two-sided version of the method, Double GSOFT, which adjusts the parameters from both sides simultaneously, enhancing the model’s flexibility and accuracy.
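A rough sketch of what orthogonal fine-tuning with such a structure might look like is given below. Several things here are assumptions rather than details from the article: the orthogonal blocks are produced with the Cayley transform (one standard way to obtain an orthogonal matrix from unconstrained parameters), the trailing `P.T` is added so that zero parameters leave the weights unchanged, the two-sided variant is read as multiplying the weight matrix on both sides, and the function names, sizes, and random stand-in for a pretrained weight are hypothetical.

```python
import numpy as np

def cayley(params):
    """Map an unconstrained square matrix to an orthogonal one (Cayley transform)."""
    S = params - params.T                 # skew-symmetric part
    I = np.eye(S.shape[0])
    return np.linalg.solve(I + S, I - S)  # (I + S)^{-1} (I - S)

def gs_orthogonal(left_params, right_params):
    """Orthogonal matrix built as block-diagonal, shuffle, block-diagonal.

    Both inputs have shape (num_groups, b, b). The final P.T is an assumption
    of this sketch: it makes all-zero parameters yield the identity matrix.
    """
    num_groups, b, _ = left_params.shape
    n = num_groups * b
    L = np.zeros((n, n))
    R = np.zeros((n, n))
    for g in range(num_groups):
        sl = slice(g * b, (g + 1) * b)
        L[sl, sl] = cayley(left_params[g])
        R[sl, sl] = cayley(right_params[g])
    perm = np.arange(n).reshape(num_groups, b).T.reshape(-1)
    P = np.eye(n)[perm]                   # interleaving shuffle
    return L @ P @ R @ P.T

rng = np.random.default_rng(0)
d_out, d_in = 16, 8
W = rng.normal(size=(d_out, d_in))        # random stand-in for a frozen pretrained weight

# One-sided adaptation: rotate the output side of W.
Q_out = gs_orthogonal(0.1 * rng.normal(size=(4, 4, 4)),
                      0.1 * rng.normal(size=(4, 4, 4)))
W_one_sided = Q_out @ W

# Two-sided adaptation (assumed reading of the "double" variant).
Q_in = gs_orthogonal(0.1 * rng.normal(size=(2, 4, 4)),
                     0.1 * rng.normal(size=(2, 4, 4)))
W_two_sided = Q_out @ W @ Q_in

# Orthogonality means the geometry of the original weights is preserved.
orth_err = np.abs(Q_out.T @ Q_out - np.eye(d_out)).max()
gram_err = np.abs(W_one_sided.T @ W_one_sided - W.T @ W).max()
sv_err = np.abs(np.linalg.svd(W_two_sided, compute_uv=False)
                - np.linalg.svd(W, compute_uv=False)).max()
Q_init = gs_orthogonal(np.zeros((4, 4, 4)), np.zeros((4, 4, 4)))

print("orthogonality error:", orth_err)
print("change in W^T W:", gram_err)
print("change in singular values:", sv_err)
```

Because every factor is orthogonal, the adapted weights keep the same pairwise inner products between columns (one-sided case) and the same singular values (two-sided case) as the original, which is one way to read the claim that orthogonal fine-tuning preserves the essential features of the pretrained model.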

'We discovered how to construct orthogonal matrices using only two special types of matrices, instead of five or six as required by previous methods. This saves computational resources and training time,' explains Nikolay Yudin, Research Assistant at the HSE Laboratory for Matrix and Tensor Methods in Machine Learning.

The researchers tested the approach on three types of tasks. When fine-tuning the RoBERTa language model, the method outperformed others while using a comparable number of parameters. In image generation, where the model needed to preserve the original features while adapting to the user’s request, GSOFT and Double GSOFT outperformed popular methods like LoRA and BOFT, all while using less memory and training time.

The authors also tested their approach on convolutional neural networks, which are commonly used for image and video analysis, such as in face recognition. The team adapted the GS matrices even for cases where the model required strong resistance to interference and distortion.

'We tested the method across various scenarios—from language and generative models to robust convolutional networks. In every case, it performed reliably while using fewer resources. This confirms that the method can be applied effectively to a variety of purposes,' comments Aibek Alanov, Senior Research Fellow at the Centre of Deep Learning and Bayesian Methods, AI and Digital Science Institute, HSE FCS, and leader of the Controllable Generative AI team at FusionBrain, AIRI.
